When Screens Go Quiet: Why Meta's Ray-Ban Display Changes Everything

I tested Meta's new Ray-Ban Display glasses with the Neural Band. This isn't a product review. This is about what happens when technology finally gets out of the way.

I slipped the Ray-Ban Display on, swiped my thumb against my index finger, and watched the tiny rectangle that usually pulls me into endless app rabbit holes simply vanish. The sunglasses stayed. The world stayed. The information I needed stayed. That split second, where distraction shrank to a quick glance and then nothing, is the difference between where we've been and where we're going.

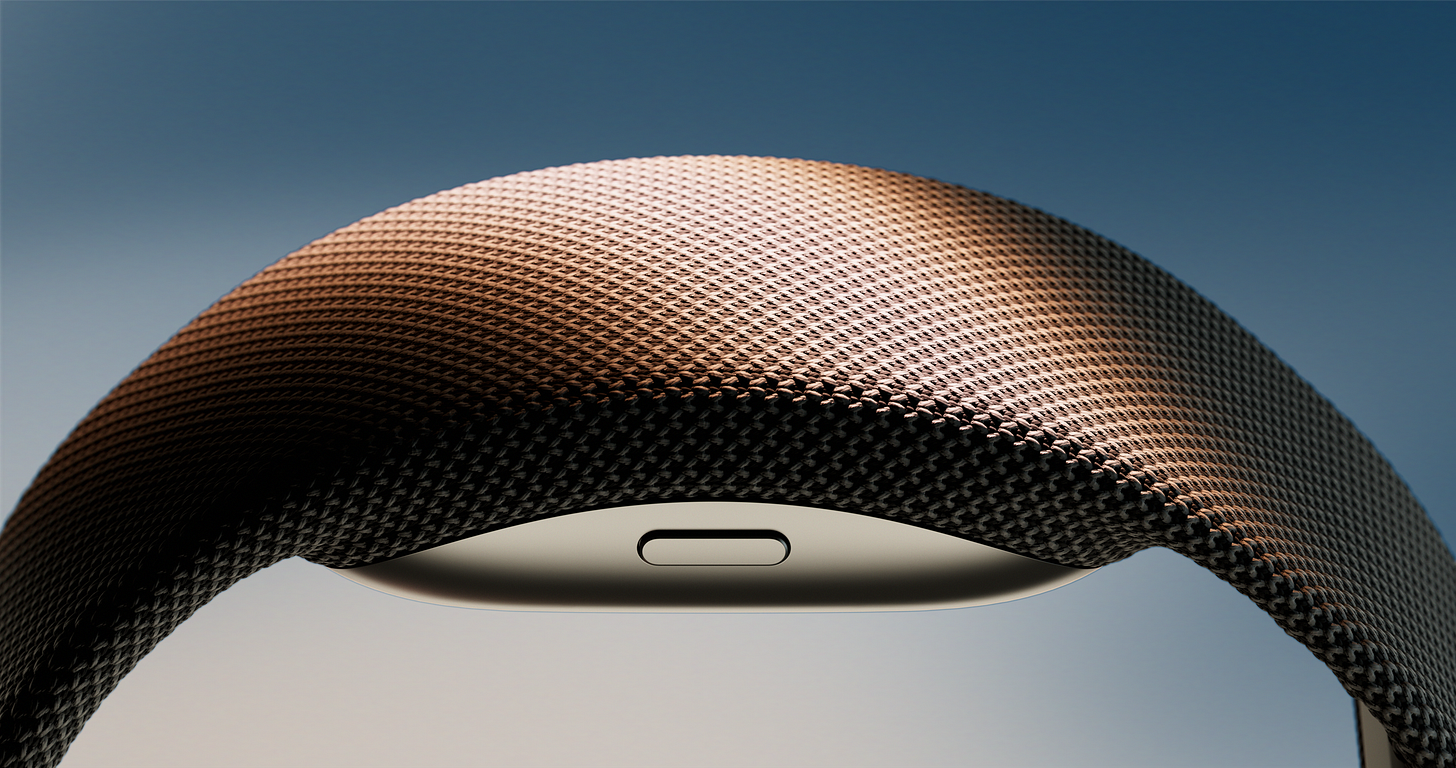

Meta just packaged a full-color display into stylish glasses and paired it with a Neural Band that reads muscle signals from your wrist. That's what the press release says. Here's what that product actually does for people:

In a nutshell, it lets useful information enter your life and leave without hijacking your attention for an hour.

The Real Breakthrough Isn't Technical

The breakthrough isn't pixels or battery chemistry. It's the device getting out of the way.

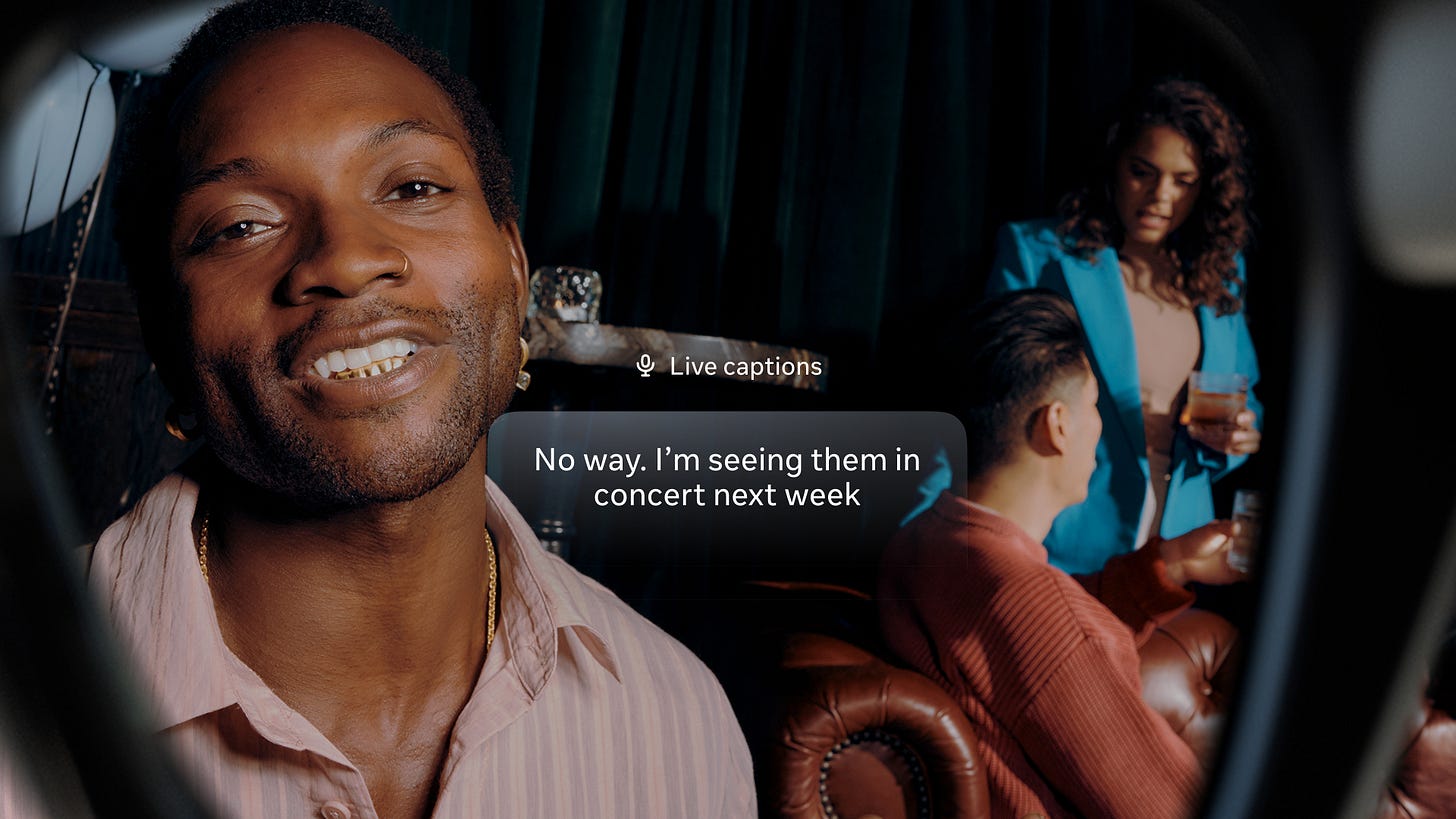

When I need to read a message, I glance. If I need to reply, I make a small, private gesture with my fingers. The display vanishes. I keep standing. My attention returns to the person in front of me, to the sunset, to the walk.

I usually keep my phone on Do Not Disturb because I like staying in control. But every time I open it, those red notification dots pull me into rabbit holes anyway. With these glasses, a quick swipe gesture opens a menu where you can pause notifications right from the glasses. No phone required. I can use the glasses on my own terms, when I choose to, not when algorithms decide I need to be notified.

Phones gave us power. They also trained our attention to orbit rectangular glass. These glasses put the power where it should be: in the moment, when you need it, then gone.

Why This Matters for Accessibility

I live with chronic nerve pain and limited use of my left arm and left hand. Holding devices, scrolling, angling cameras, stabilizing shots: simple tasks like these can become a struggle. The Neural Band reads the electrical signals your nervous system sends to the muscles in your wrist. It turns intention into action without asking me to grip, press, or reach.

Meta tested its algorithms with data from nearly 200,000 participants. That number matters because it suggests the system learned the variation between people, not just the average. When I asked a Meta engineer whether this would work for someone without hands or with limb differences, they said yes: the wristband detects electrical signals that everyone's nervous system creates, and those muscle signals fire regardless of limb differences.

Someone with tremors could take clear photos without wrestling a phone. Someone with limb differences could join calls, navigate maps, or call for help without needing a second device. The Neural Band reads muscle signals from a small set of finger gestures: swiping your thumb along your index finger to scroll, tapping thumb to index finger to select, and tapping thumb to middle finger for other functions. These gestures are designed to work for people across different physical abilities.

We Need New Language for This

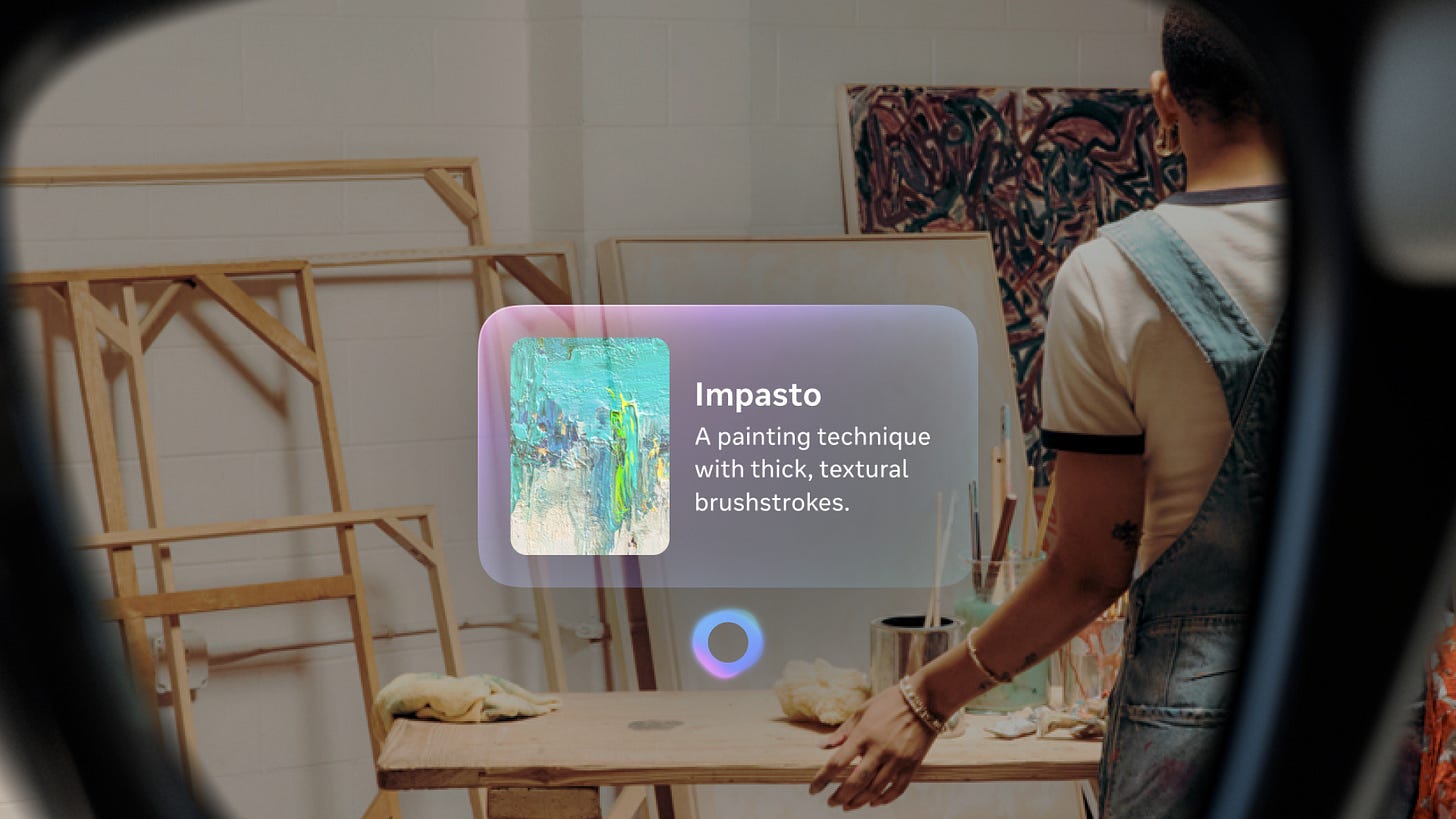

Call it an interface. Call it a projection. Call it whatever you want, but "screen" doesn't work anymore.

A screen is a static object you crane toward. What's inside these lenses behaves more like thoughts projected into the air. It has no obvious borders. It feels weightless. The imagery sits in your field of view like a prompt in your head, a stream of consciousness in your space. The monocular design places information slightly off to the side so it doesn't block your vision.

"Screen" doesn't do justice to something that feels more like picturing an image in your mind's eye and then seeing it appear in front of you. It feels like a dynamic hologram overlaying useful visuals on reality.

Culture Will Shift Fast and Messy

If people stop pulling phones out in public, eye contact could return. At concerts, you'd see hands in the air instead of towers of phones blocking the view. People would experience moments through their own eyes instead of through tiny rectangles.

Social norms will adapt. We learned to flip our phones face-down at the table to signal that we're present. We'll invent equivalent rituals for glasses. Maybe people will take them off and put them in their cases for private conversations. Maybe venues will ask for camera covers, or for AI glasses to stay in their cases. At Dave Chappelle's shows, for example, audiences have to lock their phones in sealed pouches so nothing gets recorded. I could see a similar standard emerging for glasses.

But there's an uncomfortable side. Putting cameras and microphones on faces changes who can record and who gets recorded. That proximity can have a chilling effect if people aren't aware of what the glasses can do. Fortunately, the glasses have a clearly visible indicator light that turns on whenever they capture photos or video, record, or stream.

There might be a future where pulling out a phone becomes as socially awkward as taking a speakerphone call on the bus when you don't have accessibility needs. One habit I rely on in public when I'm wearing AI glasses: I ask whether it's okay to record the moment. The person usually expects me to pull out a phone, so when I reach for my glasses instead, they learn the glasses can record too, and their consent becomes genuinely informed.

The Biology Question

The display is monocular, appearing only in the right lens. Your eye focuses close when viewing it. If we stare at these displays all day, our eye muscles may adapt to short distances more than far ones. The solution is built into the design: use them for quick peeks, not marathon sessions.

I try not to stare at my display for too long, and I encourage taking walks outside and focusing on things far in the distance so the muscles responsible for convergence can relax.

The Business Angle That Actually Matters

Meta building its own hardware changes platform dynamics. For years, the company relied on other companies' hardware ecosystems: desktop computers, then the Android and iPhone markets. Owning the device opens new ways to make money beyond ad auctions.

Subscriptions, paid features, and direct hardware sales could reduce the perverse incentive to harvest personal data. Fingers crossed! When companies make money from hardware and services instead of just attention extraction, they can build healthier product economics. That benefits anyone who cares about how technology monetizes human attention.

Looking Forward Five to Ten Years

The future probably won't follow one clear path. Content creators might keep phones since they do much of their work on mobile. But there's also a counter-movement I've been watching—younger people demanding dumb phones, devices with e-ink displays, even landline installations in 2025 because they want less distraction and more direct connection with friends and family.

The costs of specialized devices are dropping fast. We might see more variation in personal technology rather than everyone using the same rectangle. Some people will wear displays, others will use wrist inputs, some will intentionally choose simpler devices. The modalities of input will change, but the conversation about what we actually want from our technology is still happening.

This is my best guess based on what I'm seeing, not a full prediction. Different people will likely choose different approaches to staying connected while remaining present in their lives.

What Happens Next

The Ray-Ban Display starts at $799, includes the Neural Band, and launches September 30 at select retailers. If you can demo them, try them on and notice the difference between devices that demand attention and ones that hand you information.

Practice the peek. Demand clear camera indicators. Ask for hardware privacy controls. Push designers to keep the default experience subtle and ephemeral.

I feel optimistic because this represents rare innovation. It doesn't rebrand the same rectangle. It changes the shape of the problem. After years of seeing technology rehashed with different branding, this feels genuinely new—a completely different modality.

Reality is subjective anyway. If everyone has lenses that give them different views of reality, that's not fundamentally different from everyone staring at different phone feeds. The difference is whether the technology forces you to bend toward it or adapts to how you naturally move through the world.

The best part? There's still a light that comes on when recording. That safety feature matters more than all the technical specifications combined.

If you want deeper breakdowns of the Neural Band science, privacy implications, or how to develop social norms around always-on cameras, let me know which piece you want next. This is just the beginning of the conversation.
